Artificial intelligence (AI) has become central to the way organisations, governments, and societies operate. From predictive analytics in healthcare to automated decision-making in finance, AI systems are increasingly making choices that carry significant consequences for people’s lives. Yet, as these systems grow more capable and more pervasive, the need for robust governance has never been greater.

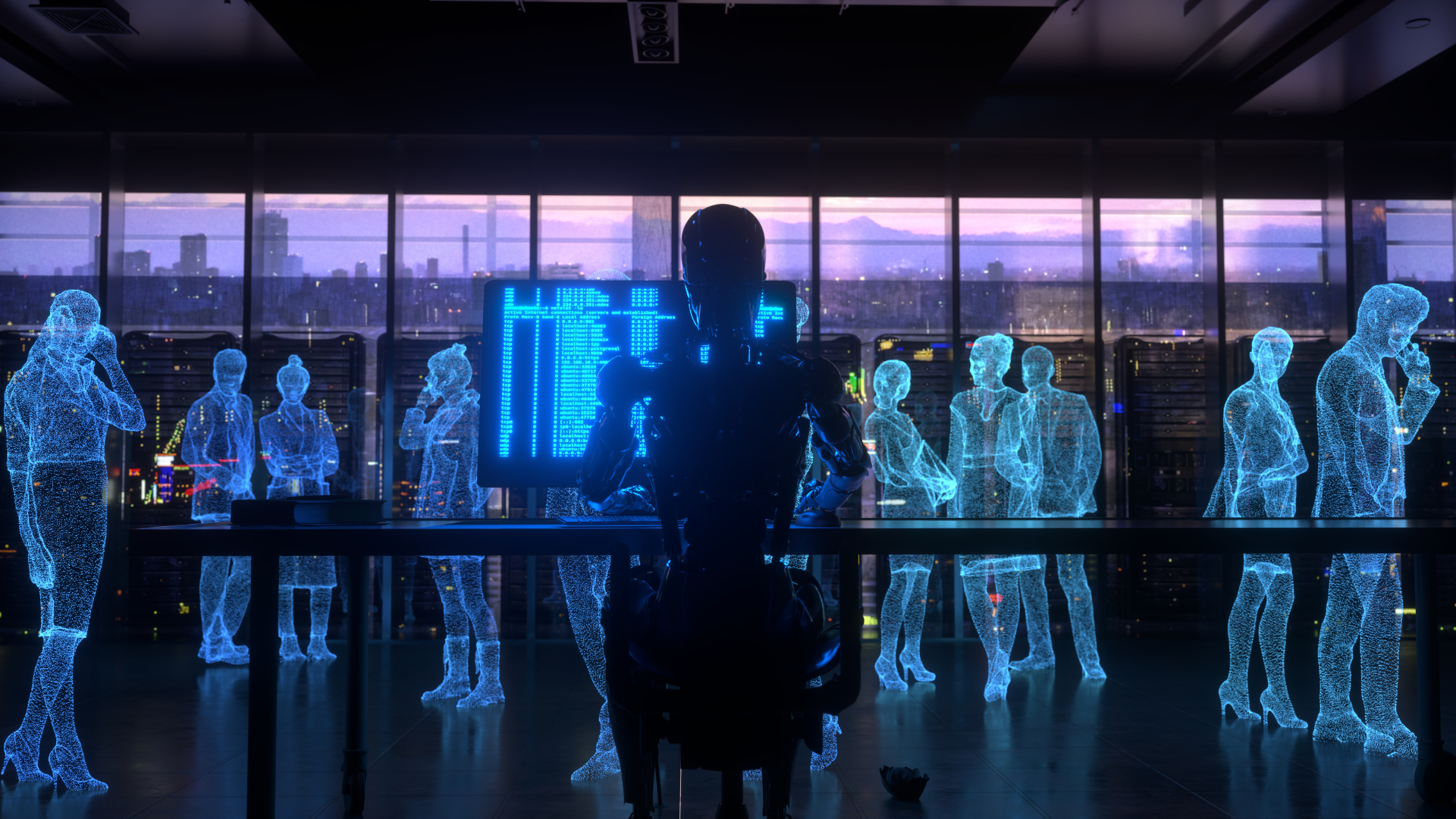

AI governance is often discussed in terms of technical safeguards, regulatory frameworks, or ethical principles. While these are crucial, the conversation frequently overlooks the single most important component: the human factor. No matter how advanced AI becomes, human judgment, responsibility, and values remain at the heart of ensuring AI serves society safely and fairly.

This article explores the essential role of humans in AI governance, the balance between automation and accountability, and why human-in-the-loop AI is not just desirable but indispensable.

Why Humans Still Matter in the Age of Intelligent Machines

The appeal of AI lies in its promise of efficiency, scale, and predictive power. Machines can process data far faster than any human, uncover hidden patterns, and automate tasks that once consumed vast resources. However, AI systems are not infallible. They reflect the data they are trained on, the biases embedded in their design, and the limitations of their algorithms.

The human factor provides what machines cannot: context, empathy, ethical reasoning, and accountability. Humans ask the difficult questions that an algorithm cannot ask: Should we use this model? Are the outcomes fair? What unintended consequences might arise?

In this sense, governance is not just about technical controls but about embedding human judgment into the very fabric of AI systems. This is where the principle of human-in-the-loop AI becomes critical.

Understanding Human-in-the-Loop AI

Human-in-the-loop AI refers to systems where humans remain actively engaged in the decision-making process. Rather than leaving AI systems to operate autonomously, humans monitor, intervene, and provide oversight at crucial stages.

This approach recognises that while AI can automate and accelerate processes, ultimate responsibility must remain with people. Whether it is approving a medical diagnosis, authorising a financial transaction, or assessing a candidate’s suitability for a role, humans act as the safeguard against errors, biases, or unintended harm.
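To make the idea concrete, here is a minimal Python sketch of one common human-in-the-loop pattern. The model's output is acted on automatically only when its confidence clears a threshold and the case is low-stakes; everything else goes to a human reviewer, whose decision is final. The names (`Decision`, `route`, `stand_in_reviewer`), the `high_stakes` flag, and the 0.9 threshold are illustrative assumptions, not a standard API or a recommended setting.

```python
from dataclasses import dataclass
from typing import Callable, Optional

# Hypothetical threshold: below this confidence, a human must decide.
CONFIDENCE_THRESHOLD = 0.9


@dataclass
class Decision:
    case_id: str
    model_label: str
    confidence: float
    high_stakes: bool
    final_label: Optional[str] = None
    decided_by: Optional[str] = None  # "model" or "human"


def route(decision: Decision, human_review: Callable[[Decision], str]) -> Decision:
    """Accept the model's label only for confident, low-stakes cases;
    otherwise defer to a human reviewer, whose answer is final."""
    if decision.confidence >= CONFIDENCE_THRESHOLD and not decision.high_stakes:
        decision.final_label = decision.model_label
        decision.decided_by = "model"
    else:
        decision.final_label = human_review(decision)
        decision.decided_by = "human"
    return decision


def stand_in_reviewer(decision: Decision) -> str:
    # Placeholder for a real review queue or UI; this one always rejects.
    return "reject"


auto = route(Decision("c1", "approve", 0.97, high_stakes=False), stand_in_reviewer)
escalated = route(Decision("c2", "approve", 0.97, high_stakes=True), stand_in_reviewer)
print(auto.decided_by, auto.final_label)            # model approve
print(escalated.decided_by, escalated.final_label)  # human reject
```

Note that high-stakes cases always reach a person, however confident the model is: the confidence score decides how much work a human does, never whether a human is accountable for consequential outcomes.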

Embedding human-in-the-loop AI into governance is not just a matter of compliance; it is about ensuring trust. Users are more likely to accept AI systems when they know that human oversight is built into the process.

The Human-Centric Approach to AI Governance

The shift towards human-centric AI is a recognition that technology must serve people, not the other way around. Governance frameworks that prioritise human wellbeing, rights, and values provide a clear direction for responsible adoption.

Placing people at the centre of AI governance requires more than symbolic oversight. It demands that human perspectives shape design choices, that societal impacts are evaluated before deployment, and that vulnerable groups are protected from harm.

The goal is not only to prevent harm but to foster positive outcomes: AI that augments human potential, supports fair decision-making, and enhances quality of life.

Accountability and the Human Oversight Imperative

One of the most challenging aspects of AI governance is accountability. When a machine makes a mistake, who is responsible? The developer? The operator? The regulator?

Clear lines of responsibility must be drawn, and this requires human oversight in AI systems. Humans must remain the final authority, capable of reviewing decisions, correcting errors, and assuming responsibility for outcomes.

This oversight is not simply about catching errors after the fact. It is about building proactive checks into the system: monitoring outputs for bias, validating models against ethical standards, and ensuring transparency in how decisions are made.
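As one illustration of monitoring outputs for bias, the sketch below compares how often a system produces a favourable outcome for each group and flags a large gap for human review. It computes per-group selection rates and the ratio of the lowest rate to the highest. The 0.8 cut-off borrows the "four-fifths" rule of thumb from US employment-selection guidance; the function names and the sample data are hypothetical, and a flag is a prompt for a person to investigate, not a verdict on fairness.

```python
from collections import defaultdict


def selection_rates(outcomes):
    """outcomes: iterable of (group, selected: bool) pairs. Returns {group: rate}."""
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, was_selected in outcomes:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {g: selected[g] / totals[g] for g in totals}


def impact_ratio(rates):
    """Lowest group selection rate divided by the highest."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected, so there is no gap in rates to report
    return min(rates.values()) / highest


FOUR_FIFTHS = 0.8  # rule-of-thumb threshold, an assumption for this sketch


def needs_human_review(outcomes, threshold=FOUR_FIFTHS):
    """Return (flagged, rates); flagged is True when the impact ratio falls below threshold."""
    rates = selection_rates(outcomes)
    return impact_ratio(rates) < threshold, rates


# Hypothetical screening results: (group, shortlisted?)
sample = ([("A", True)] * 30 + [("A", False)] * 70
          + [("B", True)] * 18 + [("B", False)] * 82)
flagged, rates = needs_human_review(sample)
print(rates, flagged)  # rates A=0.3, B=0.18; ratio 0.6 < 0.8, so flagged
```

A single ratio is deliberately crude. Its job is to surface cases where a human should look more closely at the data, the model, and the context, which is exactly the kind of judgment the check itself cannot make.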

Without such oversight, governance risks becoming hollow, unable to answer the very questions it was designed to address.

The Human Dimensions of Trust

Trust is a fragile but vital component of AI adoption. People must believe that AI systems are safe, fair, and aligned with their interests. Trust, however, cannot be manufactured by algorithms alone; it requires human stewardship.

This is where AI human factors come into play. Human factors encompass the psychological, social, and cultural dimensions of how people interact with AI systems. Do users understand how the system works? Do they feel comfortable challenging its decisions? Do they see the system as supporting, rather than replacing, their own judgment?

Addressing these factors is essential for governance. A technically flawless system can still fail if people perceive it as untrustworthy or alienating. By integrating human factors into governance, organisations create systems that are not only effective but also accepted.

Ethical Decision-Making and the Limits of Automation

AI is extraordinarily powerful at processing data, but ethical judgment remains uniquely human. Questions of fairness, justice, and societal impact cannot be reduced to lines of code.

Consider recruitment algorithms that unintentionally discriminate against candidates based on gender or ethnicity. The system may be optimised for efficiency, but efficiency without fairness is unacceptable. Here, human-in-the-loop AI ensures that ethical considerations guide decisions, not just technical metrics.

This reinforces the principle of responsible AI governance: governance that is proactive, ethical, and oriented towards the public good. Responsibility cannot be delegated to machines; it must be exercised by humans who are accountable for outcomes.

Balancing Efficiency and Human Oversight

Critics sometimes argue that keeping humans in the loop undermines the efficiency AI promises. Why use AI if humans must still intervene?

This argument misses the point. AI is not meant to replace human judgment but to augment it. While machines excel at processing vast datasets, humans excel at applying context, exercising discretion, and making ethical choices.

The true efficiency lies in the partnership: AI handling the heavy lifting of computation, while humans provide the oversight, validation, and accountability. This balance is at the heart of effective governance and sustainable adoption.

Building Human Capacity for AI Governance

If humans are to play a central role in AI governance, they must be equipped with the knowledge, skills, and confidence to do so. This requires investment in education, training, and institutional capacity.

Key components of building human capacity include:

- AI literacy across all levels of society, ensuring people understand both benefits and risks.

- Ethics training for developers, operators, and decision-makers.

- Transparent communication to build public understanding and trust.

- Interdisciplinary collaboration, bringing together expertise from technology, law, social sciences, and ethics.

These steps ensure that the human factor in AI governance is not symbolic but substantive.

Organisational Structures for Human-in-the-Loop AI

Governance must be institutionalised, not improvised. Organisations need structures and processes that embed human-in-the-loop AI into operations.

Practical steps include:

- Establishing ethics committees that review AI projects before deployment.

- Defining clear accountability structures for AI decisions.

- Implementing audit trails that record both machine outputs and human interventions.

- Creating escalation protocols where human review is mandatory.
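The audit-trail and escalation steps above can be sketched as an append-only log that records the machine's output and any human intervention side by side, together with a rule that refuses to close an escalated case without a named reviewer. This is a minimal illustration under assumed names (`AuditLog`, `record`, `finalise`, the event fields, the "loan-42" case); a production system would also need tamper-evident storage, retention rules, and access control.

```python
import json
from datetime import datetime, timezone


class AuditLog:
    """Append-only record of model outputs and human interventions (hypothetical design)."""

    def __init__(self):
        self._events = []

    def record(self, case_id, actor, action, detail):
        event = {
            "time": datetime.now(timezone.utc).isoformat(),
            "case_id": case_id,
            "actor": actor,    # e.g. "model" or a reviewer's ID
            "action": action,  # e.g. "predict", "escalate", "override", "finalise"
            "detail": detail,
        }
        self._events.append(event)
        return event

    def history(self, case_id):
        return [e for e in self._events if e["case_id"] == case_id]

    def export(self):
        return json.dumps(self._events, indent=2)


def finalise(log, case_id, reviewer=None):
    """Mandatory human review: an escalated case cannot close without a named reviewer."""
    escalated = any(e["action"] == "escalate" for e in log.history(case_id))
    if escalated and reviewer is None:
        raise PermissionError(f"case {case_id} was escalated and requires human sign-off")
    return log.record(case_id, reviewer or "model", "finalise", "decision closed")


log = AuditLog()
log.record("loan-42", "model", "predict", "decline (score 0.41)")
log.record("loan-42", "model", "escalate", "adverse decision on credit application")
try:
    finalise(log, "loan-42")  # refused: no human has signed off
except PermissionError as err:
    print(err)
log.record("loan-42", "reviewer-7", "override", "approve after checking income documents")
finalise(log, "loan-42", reviewer="reviewer-7")
print(len(log.history("loan-42")))  # 4
```

Because the refused attempt raises before anything is written, the trail shows only what actually happened: the model's prediction, the escalation, the reviewer's override, and the reviewer's sign-off.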

By formalising these processes, organisations make human oversight a routine part of governance, rather than an afterthought.

Cultural Considerations in Human-Centred Governance

Governance does not exist in a vacuum. It reflects the cultures and values of the societies that create it. In some contexts, individual autonomy may be prioritised; in others, collective wellbeing may take precedence.

Human-centric AI governance must adapt to these cultural differences while upholding universal values such as fairness, transparency, and accountability. This cultural sensitivity ensures governance frameworks remain relevant and effective across diverse contexts.

Global Implications of the Human Factor

As AI systems cross borders, the human factor in governance becomes even more critical. Different jurisdictions impose different regulations, and global harmonisation remains a challenge.

In this landscape, human judgment is essential for navigating conflicting rules, adapting systems to local contexts, and ensuring that ethical principles are not lost in translation.

This underscores the importance of global collaboration, where humans, not algorithms, negotiate the standards, norms, and practices that will shape the future of AI.

The Future of Human-in-the-Loop AI Governance

Looking ahead, the question is not whether humans will remain part of AI governance, but how their role will evolve. As AI becomes more autonomous, human involvement must become more deliberate, structured, and empowered.

Human-in-the-loop AI will increasingly involve monitoring not just technical accuracy but also ethical alignment and societal impact. Humans will need tools to understand, interpret, and challenge AI systems, ensuring they remain accountable.

The future of governance will be defined not by machines operating independently but by the partnership between human oversight and machine intelligence.

Conclusion: Humans as the True Governors of AI

AI may be powerful, but it is not self-governing. Machines cannot take responsibility for their actions, interpret ethical dilemmas, or anticipate societal consequences. Only humans can do that.

The essence of AI governance lies in the human factor: ensuring that technology serves human values, operates under human oversight, and remains accountable to human societies. By embedding human-in-the-loop AI, embracing human-centric AI, addressing AI human factors, enforcing human oversight in AI, and committing to responsible AI governance, organisations can create systems that are not only efficient but also ethical, trustworthy, and resilient.

AI will shape the future, but it is humans who will determine whether that future is fair, just, and beneficial. Governance is not about constraining AI. It is about empowering humans to guide it wisely.